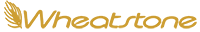

Introducing Wheatstone Layers Software Suite. Mixing, Streaming, and FM/HD Processing in an Instance.

Introducing Layers Software Suite from Wheatstone. Add multiple mix, FM/HD processing and stream instances to any server in your rack room or off-site at an AWS or other cloud extension of your WheatNet-IP audio network.

WheatNet-IP is an IP Networked End-to-End System

To meet the deadlines and demands of broadcasting today, we’ve made our IP consoles far more powerful yet easier to navigate by offloading all audio routing, sourcing, and logic functions to the WheatNet-IP audio network. WheatNet-IP is an IP audio network made up of I/O BLADEs that form a distributed network of functions and elements for almost unlimited reliability, scalability, and flexibility. WheatNet-IP is an AES67-compatible, complete studio system with audio transport, control, and an audio toolset to enable exceptionally intelligent deployment and operation.

Here is what that means to you:

Free AoIP for TV E-Book!

Putting together a new studio? Updating an existing studio?

We've put together this IP Audio for TV Production and Beyond e-book with fresh info plus some of the articles, white papers, and news we've authored for our website. It dives into some of the cool stuff you can do with a modern AoIP network like Wheatstone's WheatNet-IP. And it's FREE to download!

Intelligent Distributed Routing

WheatNet-IP uses AoIP to distribute audio intelligently to devices across scalable networks, so that every source is available to, and controllable from, any device. WheatNet-IP is AES67-compatible, yet is unique in that it represents an entire decentralized end-to-end solution, complete with audio transport, full control, and a toolset to enable exceptionally intelligent deployment and operation. It handles all audio formats (HD/SDI, AES, MADI, AoIP, and analog) to provide completely seamless operation in your broadcast/production chain.

Advanced Control Hardware

Wheatstone’s control surfaces provide complete mixing tools for interacting with our WheatNet-IP Intelligent Network. From smallest to largest, they differ only in size and scope to match your needs. All are designed expressly around the working preferences of TV audio professionals. Our Series Two is our most modest, with a tiny footprint and controls suited to live and automated TV. Our Arcus is our largest and offers our most advanced feature set. All embrace current standards, such as SMPTE 2110 and AES67, and are open-ended to incorporate future standards.

Automation Control Interface

Grass Valley Ignite. Ross Overdrive. Sony ELC. Mosart – all are systems that allow for automated production of live news. Wheatstone's Automation Control Interface works with all of those, and many more, to allow audio to function in a console-free environment. For this automated programming, we offer a VMI (Virtual Mixing Interface) along with GLASS-E, a virtual control surface that allows remote control when necessary, via the internet. With Wheatstone, audio can follow or call the shots as you decide.

Long & Short Haul Sports Coverage

Whether you're covering a story across town or handling audio for a Sunday game from 3,000 miles away, Wheatstone has the WAN solution you need. At-Home, or REMI (remote-integration model), applications have been growing as alternatives to full broadcast production vans for covering sports. Smart BLADE-3 interfaces can deploy dozens of audio services and provide multiple control and automation capabilities, including latency-free venue-side IFB. They also interface seamlessly with systems from Artel and others to carry audio and video from venue to production.

IFB & More Built In

Native to every BLADE interface is the means to create a completely decentralized IFB system with no third-party hardware. The speed of our network means there is virtually no latency when using the intercom functions, so real-time audio is a reality. Taking it further, these same tools provide a virtually unlimited number of mix minuses, speaker muting, and other essential audio-for-TV features.
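For readers new to the term, a mix minus is simply the full mix with one contributor's own audio removed, so that person does not hear themselves in the return feed. The Python sketch below illustrates the arithmetic only; it is not how a BLADE implements it, and the function name, source names, and sample values are hypothetical.

```python
def mix_minus(sources):
    """Return, for each source name, the sum of all *other* sources.

    `sources` maps a source name to a list of float samples.
    All sample lists are assumed to have the same length.
    """
    names = list(sources)
    length = len(sources[names[0]]) if names else 0
    # Full program mix: sample-wise sum of every source.
    full_mix = [sum(sources[n][i] for n in names) for i in range(length)]
    # Each return feed is the full mix minus that feed's own audio.
    return {
        n: [full_mix[i] - sources[n][i] for i in range(length)]
        for n in names
    }


# Hypothetical two-sample example with three contributors.
feeds = mix_minus({
    "anchor": [1.0, 2.0],
    "reporter": [0.5, 0.5],
    "caller": [0.25, 0.0],
})
```

Here the reporter's return feed contains the anchor and the caller but not the reporter.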

Flexibility & Format Interoperation

WheatNet-IP is a complete end-to-end audio ecosystem and as such can handle most of your audio needs comfortably. It is also format agnostic and can easily handle audio format conversion on the fly. Interfacing with different networking systems happens seamlessly in real time. Audio formats such as HD/SDI, AES, MADI, AoIP, and analog, as well as different protocols such as Dante, Ravenna, and Livewire, are accommodated through AES67, which is part of SMPTE 2110-30.

Top End Performance

WheatNet-IP is unique in the network world in the way it handles traffic. No audio is passed or available on the network until it is requested. And when that request is closed, so is that audio channel. This dramatically reduces congestion and the possibility of packet collision or failure. Plus, it's the only network that operates at full Gigabit Ethernet rates, which means extremely low latency for real-time monitoring.
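One way to picture this request-driven behavior is as a listener count per source: a stream exists only while at least one destination is asking for it. The Python sketch below is a conceptual model under that assumption, not WheatNet-IP's actual mechanism; the class and method names are hypothetical.

```python
class OnDemandStreams:
    """Toy model: a source is on the network only while it has listeners."""

    def __init__(self):
        self._listeners = {}  # source name -> number of active requests

    def request(self, source):
        # The first request opens the stream; later ones share it.
        self._listeners[source] = self._listeners.get(source, 0) + 1

    def release(self, source):
        count = self._listeners.get(source, 0)
        if count <= 1:
            # Last listener gone (or none existed): the stream is closed.
            self._listeners.pop(source, None)
        else:
            self._listeners[source] = count - 1

    def active(self):
        """Sources currently carried on the network, in sorted order."""
        return sorted(self._listeners)


net = OnDemandStreams()
net.request("mic1")
net.request("mic1")   # a second destination asks for the same source
net.request("vtr")
net.release("mic1")   # one listener remains, so mic1 stays active
```

In this model, unrequested sources consume no network capacity at all.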

Networkable Audio Processing

Built into every BLADE are stereo processors that can be deployed anywhere on the network. This provides a cost-effective solution to audio correction and/or sweetening for remote feeds, audio from different studios, call-ins, and more. Additionally, Wheatstone’s M-1, M-2, and M4-IP mic processors have become absolute standards in the broadcast industry.

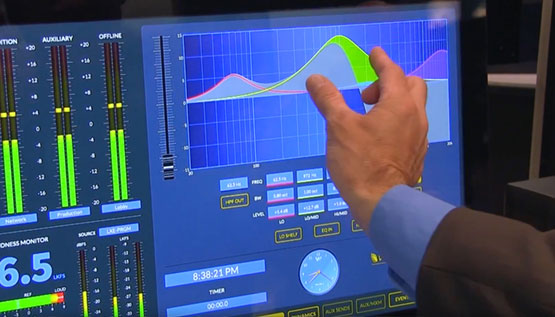

Software for True Customization

Engineers never cease to amaze us with their ingenuity, so we develop our hardware and software to encourage exploration and customization. ScreenBuilder is a very powerful software platform that allows you to create just about any on-screen control environment you can imagine. Think of it as a control construction kit that lets you put your most inventive ideas to work. IP Meters gives you the ability to create screens with banks of meters for viewing anywhere in your facility. And Navigator provides a system overview that lets you set up your workflows and create macros or salvos that can facilitate complete changeups with the push of a button.

And We Include a Rack's Worth of Tools... Virtually, Of Course

A network needs more than basic routing. It needs optimal control and integrated tools to get the most out of it. We know this from years of building networks and working with broadcast facilities to deliver their vision of how their work needs to flow. So, we build an entire rack's worth of tools into every BLADE interface we make. Mixers, processors, logic tools, routing tools, and tools that defy description. If you can think it, you can make it happen with WheatNet-IP.

• Full Crosspoint Routing of Audio and Logic

• Send and receive AES67 streams

• Handles multiple audio formats (Analog, AES, MADI, HD/SDI)

• Create, Store, and Fire Routing Salvos

• Software-based mixing built into each BLADE

• HD/SDI De-embedding

• Mix Minus creation

• IFB using triggered crosspoint control

• Routable EQ and Dynamics channels

• GPIO on each BLADE for triggered functions (salvos, IFB, crosspoint control)

• Software Logic Ports for Control over IP

• Built-in Audio Clip Player
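To make crosspoint routing and salvos from the list above concrete, here is a minimal Python sketch. It assumes a crosspoint is simply a source-to-destination connection and a salvo is a stored set of crosspoints applied together. The class, method, and signal names are hypothetical and do not represent the WheatNet-IP control API.

```python
class CrosspointRouter:
    """Toy crosspoint matrix with stored salvos."""

    def __init__(self):
        self.routes = {}  # destination -> source currently connected
        self.salvos = {}  # salvo name -> {destination: source}

    def connect(self, source, destination):
        # A destination carries one source at a time; a new connection
        # replaces whatever was routed there before.
        self.routes[destination] = source

    def store_salvo(self, name, crosspoints):
        # Copy the mapping so later edits by the caller do not alter it.
        self.salvos[name] = dict(crosspoints)

    def fire_salvo(self, name):
        # Apply every stored crospoint in one action.
        for destination, source in self.salvos[name].items():
            self.connect(source, destination)


router = CrosspointRouter()
router.connect("studio_a_mix", "air_chain")
router.store_salvo("evening_news", {
    "air_chain": "studio_b_mix",
    "ifb_1": "producer_mic",
})
router.fire_salvo("evening_news")
```

After the salvo fires, `air_chain` is fed from `studio_b_mix` and `ifb_1` from `producer_mic`, the kind of complete changeup a single button press or GPIO trigger could initiate.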

Wheatstone TV Audio Consoles

Wheatstone TV audio consoles pack in a lot of efficiency. We moved everything related to routing and logic to the network, and replaced the gargantuan consoles of the past with these efficient, compact network consoles. All function and no bloat, Wheatstone audio consoles are built broadcast tough.

Arcus

This digital mixing console features IP networking and a touchscreen interface that recognizes smartphone-style gestures. The Arcus large-format mixing console is a solid, intuitively laid out surface that's easy to operate.

Strata

Wheatstone’s Strata audio console packs 64 channels and the latest IP audio innovation into a 40-inch frame that fits most television applications and budgets under US $75,000. It integrates seamlessly with all the major production automation systems and comes with our IP audio mix engine.

Tekton 32

Wheatstone’s Tekton 32 audio console is a full-featured IP audio console that packs the latest IP audio innovations into a super-compact frame that fits even the most stringent television applications and budgets. 32 input channels (layered on 16 physical faders) and a ton of flexibility via the most advanced and reliable IP audio network in broadcast...all in a 39" x 17" x 3⅛" frame.

Virtual/Remote Strata

Virtual Strata is a multi-touch virtual TV audio console with 64 faders onscreen that seamlessly interfaces into major production automation systems, including those by Ross Video, Grass Valley and Sony.

WheatNet-IP Network

WheatNet-IP is a network system that uses Internet Protocol to distribute audio intelligently to devices across scalable networks. It makes all audio sources available to all devices (such as mixing consoles, control surfaces, software controllers, and automation devices) and controllable from any of them. WheatNet-IP is AES67-compatible, yet is unique in that it represents an entire end-to-end solution, complete with audio transport, full control, and a toolset to enable exceptionally intelligent deployment and operation.

StageBox One

The 4RU StageBox One extends console I/O, providing 32 mic/line inputs, 16 analog line outputs, 8 AES3 inputs, and 8 AES3 outputs, as well as 12 logic ports and two Ethernet ports. Its heavy-duty construction makes it well suited to on-the-go applications, such as remote sporting events. StageBox One works with all WheatNet-IP audio networked consoles.

Do More. A LOT More.

There's far more to Wheatstone's WheatNet-IP system than simple routing.

The phone comparison is a good analogy – in WheatNet-IP there's a world of audio modification tools and control options that allow you to create solutions unique to your applications with ease. All work with existing standards and are created to evolve with emerging and future standards as well.

It's the evolution of standard routers.

For more information, please give us a call at 252-638-7000 or write us at [email protected]